First impressions of Tinkergen MARK robocar

tl;dr: The Tinkergen MARK ($199) is my new favorite starter robocar. It’s got everything — computer vision, deep learning, sensors — and a great IDE and set of guides that make it all easy and fun.

Getting a robocar design for first-time users right is a tricky balance. It should be like a great videogame — easy to pick up, but challenging to master. Too many kits get it wrong one way or another. They’re either too basic and only do Arduino-level stuff like line-following or obstacle avoidance with a sonar sensor, or they’re too complex and require all sorts of toolchain setups and training to do anything useful at all.

In this post, I list three that do it best: Zumi, MARK, and the Waveshare Piracer. Of those, the Piracer is really meant for more advanced users who want to race outdoors and are comfortable with Python and the Linux command line; it only makes the hardware side of the equation easier than a fully DIY setup. Zumi is adorable but limited to the Jupyter programming environment running via a web server on its own Raspberry Pi Zero, which can be a little intimidating (and slow).

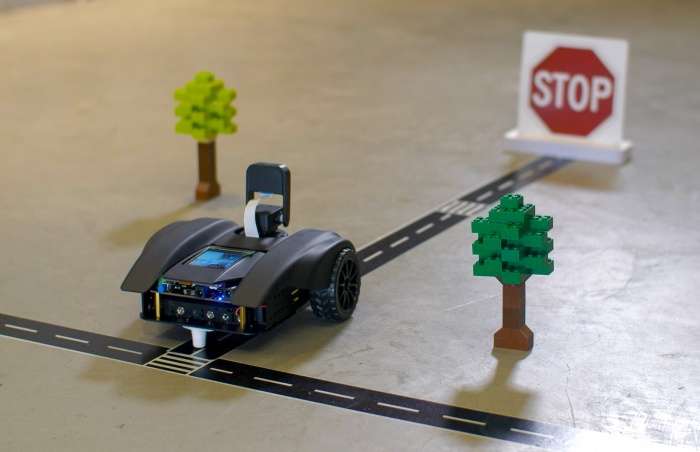

But the Tinkergen MARK gets the balance just right. Like the others, it comes as a very easy-to-assemble kit (it takes about 20 minutes to screw the various parts together and plug in the wires). Like Zumi, it starts with simple motion control, obstacle detection and line following, but it also has some more advanced functions like a two-axis gimbal for its camera and the ability to control other actuators. It also has a built-in screen on the back so you can see what the camera is seeing, with an overlay of how the computer vision is interpreting the scene.

Where MARK really shines is the learning curve from basic motion to proper computer vision and machine learning. This is thanks to its web-based IDE and tutorial environment.

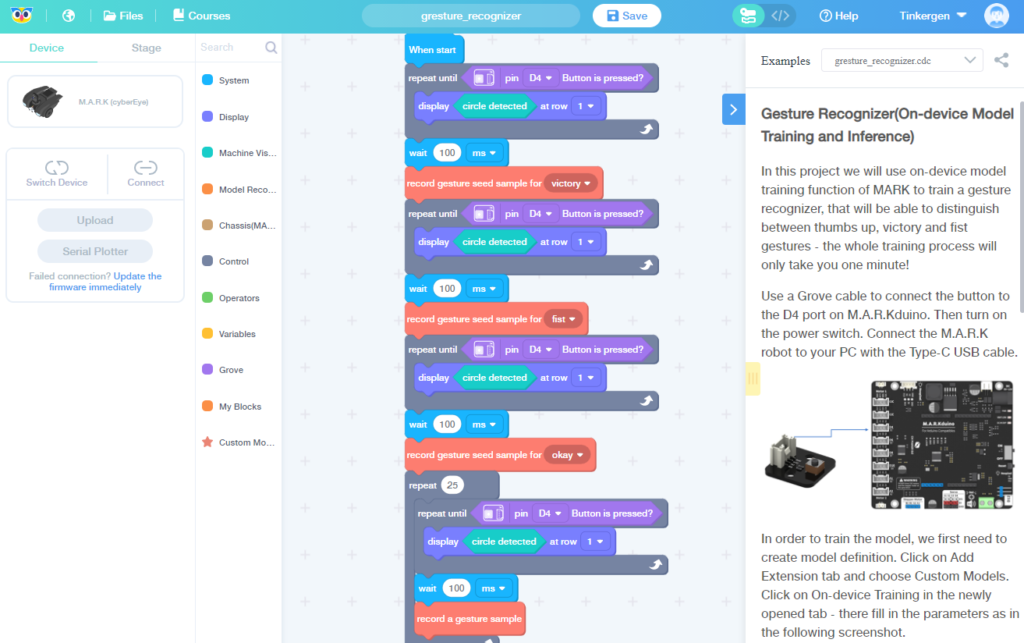

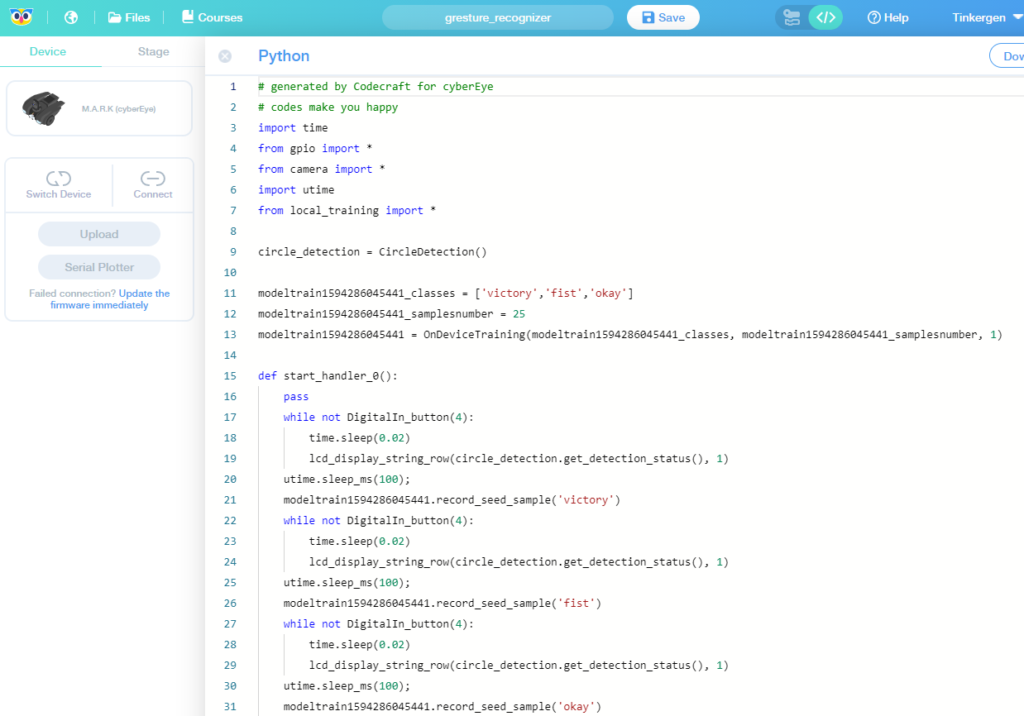

Like a lot of other educational robotics kits designed for students, it defaults to a visual programming environment that looks like Scratch, although you can click an icon at the top and it switches to Python.

Videos and guides are integrated into the web interface, and there is a series of courses that you can run through at your own pace. There is a full autonomous driving course that starts with simple lane-keeping and goes all the way to traffic signs and navigation in a city-street-like environment.

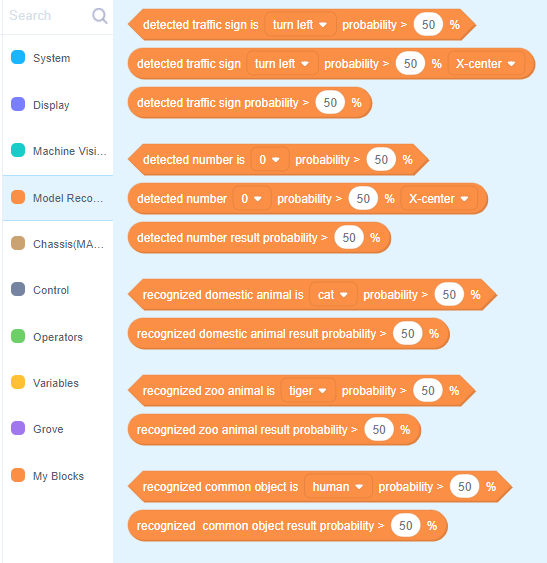

MARK also stands out for the number of built-in computer vision and deep learning functions. Pre-trained networks include recognizing traffic signs, numbers, animals and other common objects:

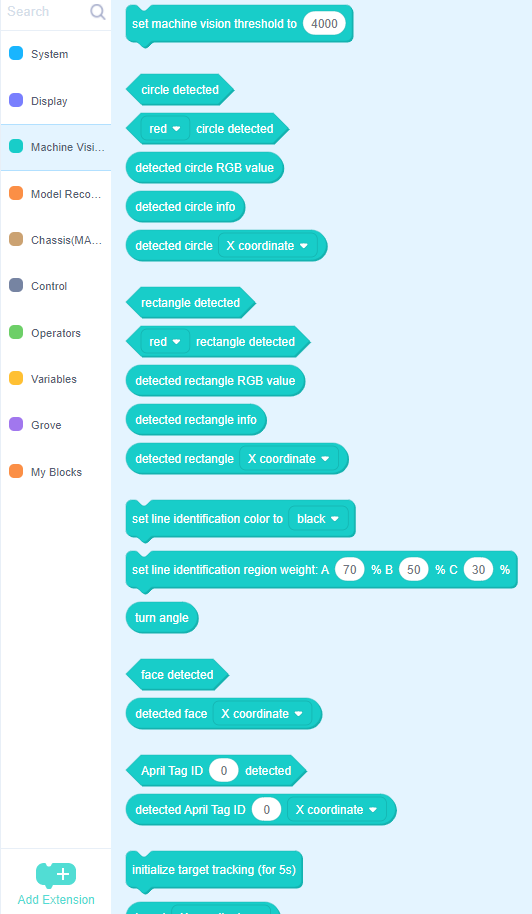

Built-in computer vision modules include shapes, colors, lines, faces, AprilTags and targets. Both visual line following (using the camera) and sensor line following (using the IR emitter/receiver pairs on the bottom of the car) are supported.
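To give a feel for what sensor line following involves, here is a minimal Python sketch of proportional steering from two IR reflectance readings. This is not MARK's actual API: the function name `steer_from_ir`, the normalized 0-to-1 readings (higher meaning more of the dark line under that sensor) and the motor speed range are all assumptions made for illustration.

```python
from typing import Tuple


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Keep a motor command inside the allowed range."""
    return max(low, min(high, value))


def steer_from_ir(
    left: float,
    right: float,
    base_speed: float = 0.4,
    gain: float = 0.5,
) -> Tuple[float, float]:
    """Return hypothetical (left_motor, right_motor) speeds in [-1, 1].

    `left` and `right` are assumed to be normalized IR readings in [0, 1],
    where a higher value means more of the dark line is under that sensor.
    """
    # Positive error: the line is more under the right sensor, so the car
    # has drifted left and should turn right.
    error = right - left
    turn = gain * error
    # Turning right means speeding up the left wheel and slowing the right.
    return clamp(base_speed + turn), clamp(base_speed - turn)


if __name__ == "__main__":
    for readings in [(0.5, 0.5), (0.0, 1.0), (1.0, 0.0)]:
        print(readings, "->", steer_from_ir(*readings))
```

When both sensors read the same, the car drives straight at the base speed. The bigger the difference between the two readings, the harder it turns back toward the line. On a real car you would tune `gain` and `base_speed` on the track.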

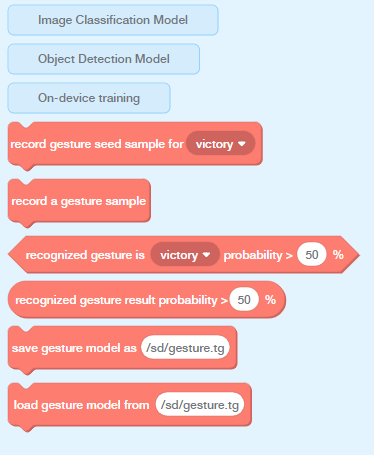

In addition, you can train it to identify new objects and gestures by recording images on the device and then training a deep learning network on your PC, or even training on the MARK itself for simpler objects.

I also got the track mat with the kit. It has the right size and contrast for dialing in your code so that it performs well. Recommended.

In short, this is the best robocar kit I’ve tried: it’s got very polished hardware and software, a surprisingly powerful set of features and great tutorials. Plus it looks great and is fun to use, in large part due to the screen at the top that shows you what the car is seeing. It makes a great holiday present for kids and adults alike. You won’t find a computer vision and machine learning experimentation package that is easier to use than this.